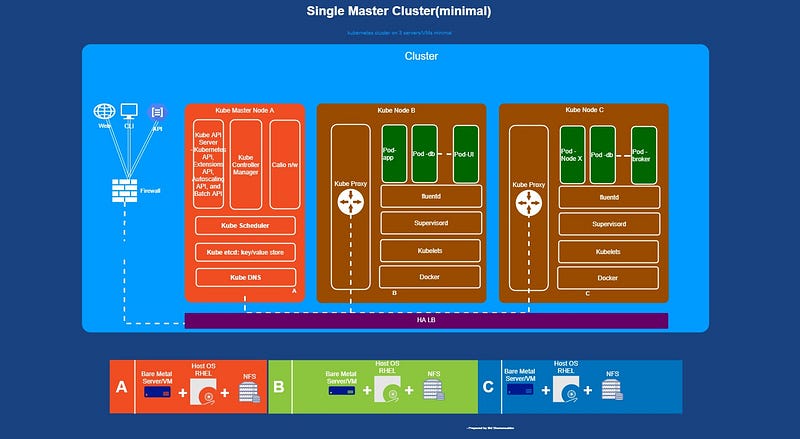

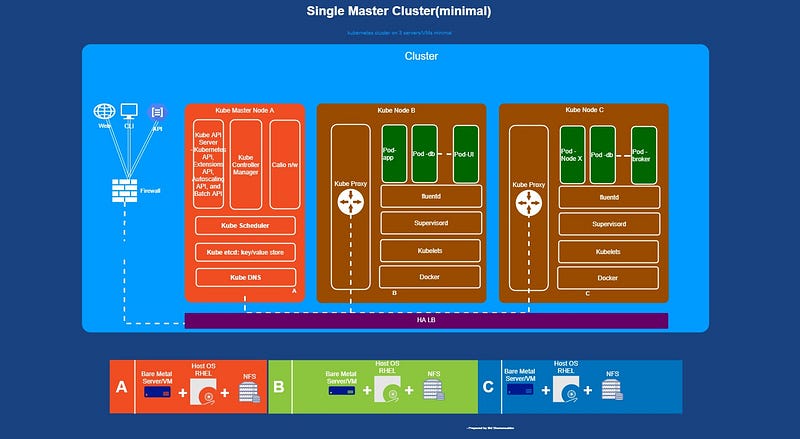

Setting Up Kubernetes Cluster — single cp or multiple cp

K8s(1.17.3) cluster on RHEL7.6/CENTOS7

This blog explains how to set up a Kubernetes cluster with a single control plane or multiple (HA) control planes.

Prerequisites

- RHEL 7 or CentOS 7 bare-metal server or VM with internet connectivity.

- HAProxy installed on one of the VMs, to be used as the load balancer (LB) for a highly available Kubernetes cluster with multiple control planes/masters.

Common steps before configuring master/worker node(s)

- Install container runtime & network interface

- Install kubeadm, kubelet, kubectl

- Disable swap and firewall

Follow the steps below to set up a single control-plane cluster with kubeadm

- Single Master node setup

- Add worker(s) with single master

Follow the steps below to set up a high availability cluster with multiple control planes using kubeadm

- Load balancer configuration

- Initiation of 1st master of multi CP cluster

- Join additional master(s) with multi cp cluster

- Join worker(s) with multi cp cluster

Please scroll below for tasks to be performed in each above step.

Step : Install container runtime & network interface

# install runtime

yum install -y yum-utils device-mapper-persistent-data lvm2

yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo

# the step below is only for RHEL with subscription manager enabled
# subscription-manager repos --enable=rhel-7-server-extras-rpms

yum install -y containerd.io docker-ce docker-ce-cli

# for a specific version, run the following instead
# yum install -y containerd.io-1.2.10 docker-ce-19.03.4 docker-ce-cli-19.03.4

[If you are unable to install containerd.io, run the command below first, then rerun the yum install above.]

yum install -y https://download.docker.com/linux/centos/7/x86_64/stable/Packages/containerd.io-1.2.6-3.3.el7.x86_64.rpm

Enable and start Docker

systemctl enable docker.service

systemctl start docker.service

Set up kernel modules and sysctl settings for the Kubernetes network (CNI)

modprobe overlay

modprobe br_netfilter

cat > /etc/sysctl.d/99-kubernetes-cri.conf <<EOF

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-ip6tables = 1

EOF

sysctl --system
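After reloading, it can be handy to confirm that the kernel really picked up the values from the file. Below is a minimal sketch of such a check; the function name `check_sysctl_conf` and its optional second argument (a root directory, defaulting to `/proc/sys`) are my own additions for illustration, not part of any tool. A value reported as `unset` for the `net.bridge.*` keys usually means the `br_netfilter` module is not loaded.

```shell
#!/bin/sh
# check_sysctl_conf CONF [ROOT]
# Compare every "key = value" line in CONF with the live value under ROOT
# (default /proc/sys). Prints one line per mismatch, exits non-zero if any.
# The body runs in a subshell so no variables leak into the caller.
check_sysctl_conf() (
    conf=$1
    root=${2:-/proc/sys}
    rc=0
    while IFS='=' read -r key want || [ -n "$key" ]; do
        key=$(printf '%s' "$key" | tr -d '[:space:]')
        want=$(printf '%s' "$want" | tr -d '[:space:]')
        # Skip blank lines and comments.
        case "$key" in ''|\#*) continue ;; esac
        # net.ipv4.ip_forward -> net/ipv4/ip_forward
        path="$root/$(printf '%s' "$key" | tr . /)"
        have=$(cat "$path" 2>/dev/null || true)
        if [ "$have" != "$want" ]; then
            echo "MISMATCH $key: want=$want have=${have:-unset}"
            rc=1
        fi
    done < "$conf"
    exit "$rc"
)

# Usage on a node:
#   check_sysctl_conf /etc/sysctl.d/99-kubernetes-cri.conf && echo "sysctl settings applied"
```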

yum-config-manager --add-repo=https://cbs.centos.org/repos/paas7-crio-115-release/x86_64/os/

yum install --nogpgcheck -y cri-o

systemctl enable crio.service

systemctl start crio.service

cat > /etc/sysctl.d/99-kubernetes-cri.conf <<EOF
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
net.bridge.bridge-nf-call-ip6tables = 1
EOF

$ sysctl --system

Configure containerd

cat > /etc/modules-load.d/containerd.conf <<EOF
overlay
br_netfilter
EOF

mkdir -p /etc/containerd

containerd config default > /etc/containerd/config.toml

systemctl restart containerd

systemctl status containerd

Step : Install k8s (kubeadm, kubelet, kubectl)

# Add the k8s yum repo
cat <<EOF > /etc/yum.repos.d/kubernetes.repo
[kubernetes]
name=Kubernetes
baseurl=https://packages.cloud.google.com/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=1
repo_gpgcheck=1
gpgkey=https://packages.cloud.google.com/yum/doc/yum-key.gpg https://packages.cloud.google.com/yum/doc/rpm-package-key.gpg
EOF

Set SELinux to permissive mode

setenforce 0

sed -i 's/^SELINUX=enforcing$/SELINUX=permissive/' /etc/selinux/config

Install k8s core components

yum install -y kubelet kubeadm kubectl --disableexcludes=kubernetes

# For a specific version, i.e. 1.17
# yum install -y kubelet-1.17.\* kubeadm-1.17.\* kubectl-1.17.\* --disableexcludes=kubernetes

Step : Disable swap & Firewall

$ vi /etc/fstab   # comment out all swap entries

$ swapoff -a

$ free -h
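If you prefer not to edit /etc/fstab by hand on every node, the swap entries can be commented out with sed. This is only a sketch: the function name `comment_out_swap` is made up for this post, and it assumes the usual fstab layout where the word `swap` appears as a whitespace-separated field. It keeps a `.bak` copy of the original file.

```shell
#!/bin/sh
# comment_out_swap FILE
# Prefix every active (not already commented) swap entry in FILE with '#'.
# The original file is preserved as FILE.bak.
comment_out_swap() {
    sed -i.bak -E '/^[[:space:]]*#/!s/^(.*[[:space:]]swap[[:space:]].*)$/#\1/' "$1"
}

# Usage on a node (as root), followed by turning swap off for the running system:
#   comment_out_swap /etc/fstab
#   swapoff -a
```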

#firewalld

$ systemctl status firewalld

$ systemctl stop firewalld

$ systemctl disable firewalld

Step : Single Master node setup

Remove old CNI if any

rm -rf /opt/cni/bin

rm -rf /etc/cni/net.d

Initialize the master

$ kubeadm init --node-name master --apiserver-advertise-address=<<master node ip>> --pod-network-cidr <<network range>> --service-cidr <<network range>>

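# example (illustrative ranges: the pod and service CIDRs must not overlap each other or the node network, and the Calico IP pool has to match the pod CIDR)

$ kubeadm init --node-name master --apiserver-advertise-address=192.168.56.101 --pod-network-cidr 10.244.0.0/16 --service-cidr 10.96.0.0/12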
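The pod network range, the service range and the network the nodes live on should be three distinct ranges; overlapping them is an easy mistake that only shows up later as broken routing. Here is a small sketch of an overlap check that can be run before `kubeadm init`. The helper names (`ip_to_int`, `cidr_range`, `cidr_overlap`) are my own, it handles IPv4 only, and it assumes addresses without leading zeros.

```shell
#!/bin/sh
# ip_to_int A.B.C.D: print the IPv4 address as a 32-bit integer.
ip_to_int() (
    # Subshell body: changing IFS and the positional parameters stays local.
    IFS=.
    set -- $1
    echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
)

# cidr_range CIDR: print "first last" address of the block as integers.
cidr_range() (
    ip=${1%/*}
    bits=${1#*/}
    addr=$(ip_to_int "$ip")
    mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
    echo "$(( addr & mask )) $(( (addr & mask) | (mask ^ 0xFFFFFFFF) ))"
)

# cidr_overlap CIDR1 CIDR2: exit 0 if the two blocks share any address.
cidr_overlap() (
    set -- $(cidr_range "$1") $(cidr_range "$2")
    # $1..$2 is the first range, $3..$4 the second.
    [ "$1" -le "$4" ] && [ "$3" -le "$2" ]
)

# Usage: warn when a candidate pod CIDR collides with the node network.
if cidr_overlap 192.168.56.0/24 192.168.56.1/24; then
    echo "pod CIDR overlaps the node network, pick another range"
fi
```

With the values above the warning is printed, because 192.168.56.1/24 is the same block as the node network 192.168.56.0/24.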

Configure kubectl to access the cluster

mkdir -p $HOME/.kube

$ sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

$ sudo chown $(id -u):$(id -g) $HOME/.kube/config

Set up the k8s internal networking using Calico

$ kubectl apply -f https://docs.projectcalico.org/v3.9/manifests/calico-etcd.yaml

#or

# kubectl apply -f https://docs.projectcalico.org/v3.8/manifests/calico.yaml

Copy the token to join workers

kubeadm token create --print-join-command

Step: Add worker with single master (for each worker node)

# install kubeadm, kubelet and kubectl then perform the below

Join as worker

$ kubeadm join 192.168.56.101:6443 --node-name worker1 --token nhms9j.lb3p7y0vydc8jgun --discovery-token-ca-cert-hash sha256:c305a3064c80997a671968739908fe4b8792f45237fad177fed75542494b465d

$ kubectl get nodes   # run on the master to check
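If the join output was lost, the value for `--discovery-token-ca-cert-hash` can be recomputed on the master from the cluster CA certificate. The pipeline below follows the one given in the kubeadm documentation; the wrapper name `ca_cert_hash` is just for illustration, and it assumes an RSA CA key, which is what kubeadm generates by default.

```shell
#!/bin/sh
# ca_cert_hash [CA_CERT]
# Print the SHA-256 hash of the CA public key (hex, without the "sha256:" prefix).
# CA_CERT defaults to the kubeadm location on a control-plane node.
ca_cert_hash() {
    openssl x509 -pubkey -in "${1:-/etc/kubernetes/pki/ca.crt}" \
        | openssl rsa -pubin -outform der 2>/dev/null \
        | openssl dgst -sha256 -hex \
        | sed 's/^.* //'
}

# Usage on the master:
#   echo "sha256:$(ca_cert_hash)"
```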

Step: Create user and give access to specific namespace

# Create namespace
$ kubectl create namespace projectxnamespace

# create certificate and sign with k8s certificate
$ mkdir /home/appxuser/.kube && cd /home/appxuser/.kube

Generate Certificate and sign with kubernetes certificate

$ openssl genrsa -out appxuser.key 2048

$ openssl req -new -key appxuser.key -out appxuser.csr -subj "/CN=appxuser"

$ openssl x509 -req -in appxuser.csr -CA /etc/kubernetes/pki/ca.crt -CAkey /etc/kubernetes/pki/ca.key -CAcreateserial -out appxuser.crt -days 500
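Kubernetes takes the user name from the CN of the client certificate, so the CN has to match the user name used in the role binding exactly; a stray space inside the `-subj` string is enough to break it. A quick way to be sure is to print the subject of the CSR before signing it. The sketch below wraps key and CSR generation in a hypothetical helper, `make_user_csr`, that does just that.

```shell
#!/bin/sh
# make_user_csr USER DIR
# Generate DIR/USER.key and DIR/USER.csr with CN=USER, then print the CSR subject
# so the CN can be checked by eye before the CSR is signed.
make_user_csr() (
    user=$1
    dir=$2
    openssl genrsa -out "$dir/$user.key" 2048 2>/dev/null
    openssl req -new -key "$dir/$user.key" -out "$dir/$user.csr" -subj "/CN=$user"
    openssl req -in "$dir/$user.csr" -noout -subject
)

# Usage:
#   make_user_csr appxuser /home/appxuser/.kube
```

The exact formatting of the printed subject differs a little between OpenSSL versions, but the CN should read `appxuser` with nothing around it.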

Create kubeconfig for new user

$ cp /etc/kubernetes/pki/ca.crt /home/appxuser/.kube/

$ kubectl --kubeconfig appxuser.kubeconfig config set-cluster kubernetes --server https://192.168.56.101:6443 --certificate-authority=ca.crt

$ kubectl --kubeconfig appxuser.kubeconfig config set-credentials appxuser --client-key appxuser.key --client-certificate appxuser.crt

$ kubectl --kubeconfig appxuser.kubeconfig config set-context appxuser-context --namespace projectxnamespace --cluster kubernetes --user appxuser

$ vi appxuser.kubeconfig # edit current context to appxuser-context

$ mv appxuser.kubeconfig config

Create a role binding for the new user

# role.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: appxuser
  namespace: projectxnamespace
subjects:
- kind: User
  name: appxuser
  apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: ClusterRole
  name: admin
  apiGroup: rbac.authorization.k8s.io

kubectl apply -f role.yaml

HA Cluster with LB, multinode master(stacked etcd)

Perform the steps above (Install container runtime & network interface; Install k8s: kubeadm, kubelet, kubectl; Disable swap & Firewall; Master node setup)

Step : LB (HAProxy config)

$ vi /etc/haproxy/haproxy.cfg

# add below entry

frontend Kubernetes

# LB ip

bind 192.168.56.104:6443

option tcplog

mode tcp

default_backend kubernetes-master-nodes

backend kubernetes-master-nodes

mode tcp

balance roundrobin

option tcp-check

# k8s master information

server k8s-master-0 192.168.56.103:6443 check fall 3 rise 2

server k8s-master-1 192.168.56.101:6443 check fall 3 rise 2
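When masters are added or removed, the `server` lines are the only part of this config that changes, so it can be generated rather than edited by hand. A minimal sketch, assuming the same names and port as above; the function `haproxy_k8s_config` is hypothetical and only prints the frontend/backend sections, it does not touch the rest of haproxy.cfg.

```shell
#!/bin/sh
# haproxy_k8s_config LB_IP MASTER_IP...
# Print the frontend/backend sections for the Kubernetes API server,
# with one "server" line per master IP given.
haproxy_k8s_config() (
    lb_ip=$1
    shift
    cat <<EOF
frontend Kubernetes
    bind ${lb_ip}:6443
    option tcplog
    mode tcp
    default_backend kubernetes-master-nodes

backend kubernetes-master-nodes
    mode tcp
    balance roundrobin
    option tcp-check
EOF
    i=0
    for ip in "$@"; do
        echo "    server k8s-master-${i} ${ip}:6443 check fall 3 rise 2"
        i=$((i + 1))
    done
)

# Usage: print the sections for the LB and the two masters used in this post.
#   haproxy_k8s_config 192.168.56.104 192.168.56.103 192.168.56.101
```

After merging the output into the config file, `haproxy -c -f /etc/haproxy/haproxy.cfg` checks the syntax before the service is restarted.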

Step: Prepare 1st master/CP

Init the first master

$ kubeadm init --control-plane-endpoint "LB:LB_port" --upload-certs --node-name <master name> --apiserver-advertise-address=<master ip> --pod-network-cidr <ip range> --service-cidr <ip range>

# example (illustrative ranges: the pod and service CIDRs must not overlap each other or the node network, and the Calico IP pool has to match the pod CIDR)

$ kubeadm init --control-plane-endpoint "192.168.56.104:6443" --upload-certs --apiserver-advertise-address=192.168.56.101 --pod-network-cidr 10.244.0.0/16 --service-cidr 10.96.0.0/12

Note the two join commands printed at the end of the output:

#Join master

$ kubeadm join 192.168.56.104:6443 --token fjrjj7.y94r4p5a37aevh6c \
    --discovery-token-ca-cert-hash sha256:e7ac92b9be10695cc66cabc615cdafb4f07ddcc94e8b27d64107fb1337a3167a \
    --control-plane --certificate-key b6a2d427137072bfb5bad088ea33740551d1bb0035518925345fb3736592b15c

#Join worker

$ kubeadm join 192.168.56.104:6443 --token fjrjj7.y94r4p5a37aevh6c \
    --discovery-token-ca-cert-hash sha256:e7ac92b9be10695cc66cabc615cdafb4f07ddcc94e8b27d64107fb1337a3167a

Configure kubectl

$ mkdir -p $HOME/.kube

$ sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

$ sudo chown $(id -u):$(id -g) $HOME/.kube/config

Configure networking with calico

$ kubectl apply -f https://docs.projectcalico.org/v3.9/manifests/calico-etcd.yaml

or

$ kubectl apply -f https://docs.projectcalico.org/v3.8/manifests/calico.yaml

Step : Join worker with HA cluster

Steps

1. Install container runtime & network interface

2. Install k8s (kubeadm, kubelet, kubectl)

3. Disable swap & Firewall

Then run the worker join command generated during the 1st master init

$ kubeadm join 192.168.56.104:6443 --token fjrjj7.y94r4p5a37aevh6c \
    --discovery-token-ca-cert-hash sha256:e7ac92b9be10695cc66cabc615cdafb4f07ddcc94e8b27d64107fb1337a3167a

Step : Join additional master with HA cluster

Steps

1. Install container runtime & network interface

2. Install k8s (kubeadm, kubelet, kubectl)

3. Disable swap & Firewall

Then run the master join command generated during the 1st master init

# the below is to delete old CNI config
$ rm -rf /opt/cni/bin && rm -rf /etc/cni/net.d

$ kubeadm join 192.168.56.104:6443 --token ryeudu.2gyxzumsm71lqzry --apiserver-advertise-address=192.168.56.103 \
    --discovery-token-ca-cert-hash sha256:80a3e0fa3cfd0637806a0520ce51b6fa75a0f2366ea927b53168d2759877cc20 \
    --control-plane --certificate-key fa8854379cd9d65e02b5707bfdae5cf637288b16934786477900dd6092699f22
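Keep in mind that, as far as I know, kubeadm bootstrap tokens expire after 24 hours by default and the uploaded certificate key after about two hours, so a master added later needs fresh values for both. The sketch below assembles a control-plane join command from a worker join command plus a certificate key; `build_cp_join` is a made-up helper, and the two kubeadm commands in the usage comment (run on an existing master) are how I would obtain the inputs.

```shell
#!/bin/sh
# build_cp_join "WORKER_JOIN_CMD" CERT_KEY [ADVERTISE_IP]
# Turn a worker join command into a control-plane join command by appending
# --control-plane and --certificate-key, plus an optional advertise address.
build_cp_join() (
    cmd="$1 --control-plane --certificate-key $2"
    if [ -n "${3:-}" ]; then
        cmd="$cmd --apiserver-advertise-address=$3"
    fi
    echo "$cmd"
)

# Usage on an existing master (assumes the certificate key is the last line
# printed by the upload-certs phase):
#   join_cmd=$(kubeadm token create --print-join-command)
#   cert_key=$(kubeadm init phase upload-certs --upload-certs | tail -n 1)
#   build_cp_join "$join_cmd" "$cert_key" 192.168.56.103
```

The printed command is then run on the new master, after the three preparation steps listed above.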